Predibase Fine-Tuning Index Ranks Best Open-source LLMs for Common Task Types

Shows how most fine-tuned open-source LLMs outperform GPT-4 on specialized tasks and cost orders of magnitude less to train and serve

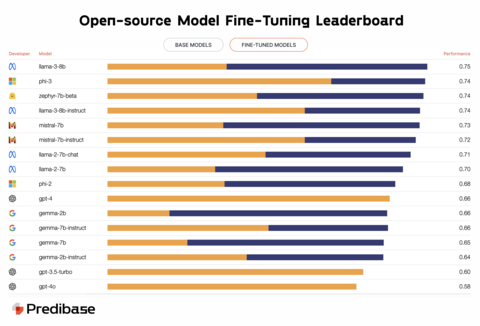

SAN FRANCISCO--(BUSINESS WIRE)--Predibase, the developer platform for fine-tuning and serving LLMs, today launched the Fine-Tuning Index, which ranks the top open-source LLMs by performance on various tasks and shows how fine-tuning dramatically improves their performance for production applications. Drawing on more than 700 fine-tuning experiments, the index reports the performance of 13 of the most popular open-source LLMs across 31 distinct tasks, compared with leading commercial LLMs, and is designed to help enterprise AI teams select the best open-source model for their specific applications. View the index here.

“Most organizations recognize that open-source LLMs are closing the performance gap between commercial models like GPT-4, but many are surprised when they learn that open-source LLMs already significantly outperform GPT-4 when fine-tuned for specific applications,” said Dev Rishi, co-founder and CEO of Predibase.

Furthermore, teams often don’t know which open-source LLM will perform best for their set of tasks. While there is a general sense that certain LLMs perform better out of the box, the nuances in performance between base models and fine-tuned LLMs across different task types have never been studied and reported in aggregate. The Fine-Tuning Index helps teams select the most appropriate open-source LLM for a given application with more confidence, so they can spend less time on trial-and-error comparisons of model results and get to production faster with the right fine-tuned LLM.

Key findings from the research powering the Fine-Tuning Index include:

- Outperformance of GPT-4: The majority of fine-tuned open-source models exhibited superior performance compared to GPT-4 and GPT-4o, with Llama 3, Phi-3, and Zephyr leading the pack.

- Cost-Effectiveness: Fine-tuned models proved not only more cost-effective but also faster to train and serve, with GPT-4 costing orders of magnitude more per month for a given enterprise use case. In addition, fine-tuning each LLM for a typical task cost only about $8 in compute.

- Specialized Task Superiority: Fine-tuned LLMs excel in specialized tasks, such as legal contract review and medical classification, surpassing the performance of GPT-4 on 85% of tested tasks.

- Optimal Base Models: Llama 3, Phi-3, and Zephyr architectures emerged as the top choices for fine-tuning, offering superior performance across various tasks.

These findings are discussed in greater detail in a report recently published by the Predibase research team titled “LoRA Land: 310 Fine-tuned LLMs that Rival GPT-4, A Technical Report.” Predibase's research not only showcases the capabilities of open-source LLMs but also provides valuable insights and tools for organizations looking to leverage these models effectively. By democratizing access to advanced language models and empowering developers with cost-effective solutions, Predibase is paving the way for teams bringing AI products to market.

About Predibase

Predibase is the fastest and most efficient way for developers to build their own specialized LLMs in the cloud. As the developer platform for fine-tuning and serving LLMs, Predibase makes it easy for engineering teams to fine-tune and serve any open-source AI model in their own cloud or on state-of-the-art serverless infrastructure. Predibase is trusted by organizations ranging from Fortune 500 enterprises to innovative startups like Sekure Payments, OnGrid, AirMDR, and Prosperia Health. Built by the team that created the internal AI platforms at Apple and Uber, Predibase is fast, efficient, and scalable for any size job. Most importantly, Predibase is built on open-source foundations and can be deployed in your cloud so all of your data and models stay in your control.

For more information or to get started with a free trial, visit http://www.predibase.com or follow @predibase.

Contacts

Kevin Pedraja

Voxus PR

kpedraja@voxuspr.com