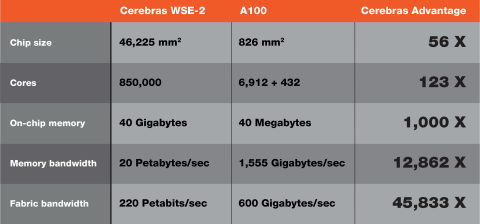

LOS ALTOS, Calif.--(BUSINESS WIRE)--Cerebras Systems, a company dedicated to accelerating Artificial Intelligence (AI) compute, today unveiled the largest AI processor ever made, the Wafer Scale Engine 2 (WSE-2). Custom-built for AI work, the 7nm-based WSE-2 delivers a massive leap forward for AI compute, crushing Cerebras’ previous world record with a single chip that boasts 2.6 trillion transistors and 850,000 AI-optimized cores. By comparison, the largest graphics processing unit (GPU) has only 54 billion transistors, 2.55 trillion fewer than the WSE-2. The WSE-2 also has 123x more cores and 1,000x more high-performance on-chip memory than GPU competitors.

The WSE-2 will power the Cerebras CS-2, the industry’s fastest AI computer, designed and optimized for 7nm and beyond. Thanks to the super-charged WSE-2, the CS-2 more than doubles the performance of Cerebras’ first-generation CS-1. Manufactured by TSMC on its industry-leading 7nm node, the WSE-2 more than doubles every performance characteristic of the first-generation WSE: transistor count, core count, memory, memory bandwidth and fabric bandwidth. As a result, on every performance metric the WSE-2 is orders of magnitude larger and more performant than any competing GPU on the market.

“Less than two years ago, Cerebras revolutionized the industry with the introduction of WSE, the world’s first wafer scale processor,” said Dhiraj Mallik, Vice President Hardware Engineering, Cerebras Systems. “In AI compute, big chips are king, as they process information more quickly, producing answers in less time – and time is the enemy of progress in AI. The WSE-2 solves this major challenge as the industry’s fastest and largest AI processor ever made.”

“TSMC has long partnered with the industry innovators to manufacture advanced processors with leading performance. We are pleased with the result of our continuous collaboration with Cerebras Systems in manufacturing the Cerebras WSE-2 on our 7nm process, another extraordinary accomplishment and milestone for wafer scale development after the introduction of the Cerebras 16nm WSE less than two years ago,” said Sajiv Dalal, Senior Vice President of Business Management, TSMC North America. “TSMC’s leading-edge technology, manufacturing excellence, and rigorous attention to quality enable us to meet Cerebras’ stringent defect density requirements and support them as they continue to unleash silicon innovation.”

With every component optimized for AI work, the CS-2 delivers more compute performance in less space and at lower power than any other system. Depending on the workload, from AI to HPC, the CS-2 delivers hundreds or thousands of times more performance than legacy alternatives, at a fraction of the power draw and space. A single CS-2 replaces clusters of hundreds or thousands of graphics processing units (GPUs) that consume dozens of racks, use hundreds of kilowatts of power, and take months to configure and program. At only 26 inches tall, the CS-2 fits in one-third of a standard data center rack.

Over the past year, customers around the world have deployed the Cerebras WSE and CS-1, including Argonne National Laboratory; Lawrence Livermore National Laboratory; Pittsburgh Supercomputing Center (PSC), for its groundbreaking Neocortex AI supercomputer; EPCC, the supercomputing centre at the University of Edinburgh; pharmaceutical leader GlaxoSmithKline; Tokyo Electron Devices; and more.

“At GSK we are applying machine learning to make better predictions in drug discovery, so we are amassing data – faster than ever before – to help better understand disease and increase success rates,” said Kim Branson, SVP, AI/ML, GlaxoSmithKline. “Last year we generated more data in three months than in our entire 300-year history. With the Cerebras CS-1, we have been able to increase the complexity of the encoder models that we can generate, while decreasing their training time by 80x. We eagerly await the delivery of the CS-2 with its improved capabilities so we can further accelerate our AI efforts and, ultimately, help more patients.”

“As an early customer of Cerebras solutions, we have experienced performance gains that have greatly accelerated our scientific and medical AI research,” said Rick Stevens, Argonne National Laboratory Associate Laboratory Director for Computing, Environment and Life Sciences. “The CS-1 allowed us to reduce the experiment turnaround time on our cancer prediction models by 300x over initial estimates, ultimately enabling us to explore questions that previously would have taken years, in mere months. We look forward to seeing what the CS-2 will be able to do with more than double that performance.”

Cerebras’ pioneering work has won nearly every award in the industry, including the Global Semiconductor Alliance’s (GSA) Startup To Watch, Fast Company’s Best World Changing Ideas, Fast Company’s World’s Most Innovative Companies, IEEE Spectrum’s Emerging Technology Awards, Forbes AI 50 2020, HPCwire’s Readers’ and Editors’ Choice Awards, and CB Insights AI 100, among many others.

“In August 2019, Cerebras delivered the Wafer Scale Engine (WSE), the only trillion-transistor processor in existence at that time,” said Linley Gwennap, principal analyst, The Linley Group. “Now the company has doubled this success with the WSE-2, which pushes the transistor count to 2.6 trillion. This is a great achievement, especially when considering that the world’s third largest chip is 2.55 trillion transistors smaller than the WSE-2.”

For more information, please visit https://cerebras.net/product/.

About Cerebras Systems

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. We have come together to build a new class of computer to accelerate artificial intelligence work by three orders of magnitude beyond the current state of the art. The CS-2 is the fastest AI computer in existence. It contains a collection of industry firsts, including the Cerebras Wafer Scale Engine (WSE-2). The WSE-2 is the largest chip ever built. It contains 2.6 trillion transistors and covers more than 46,225 square millimeters of silicon. The largest graphics processor on the market has 54 billion transistors and covers 815 square millimeters. In artificial intelligence work, large chips process information more quickly, producing answers in less time. As a result, neural networks that in the past took months to train can now train in minutes on the Cerebras CS-2 powered by the WSE-2.